Ziming Liu

Ziming Liu (刘子鸣)

Researcher in AI + Science

Email: zmliu1@stanford.edu

or lzmsldmjxm@gmail.com

Hi! I’m Ziming Liu (刘子鸣). I will join the College of AI at Tsinghua University as a tenure-track Assistant Professor, starting in Fall 2026 (tentative).

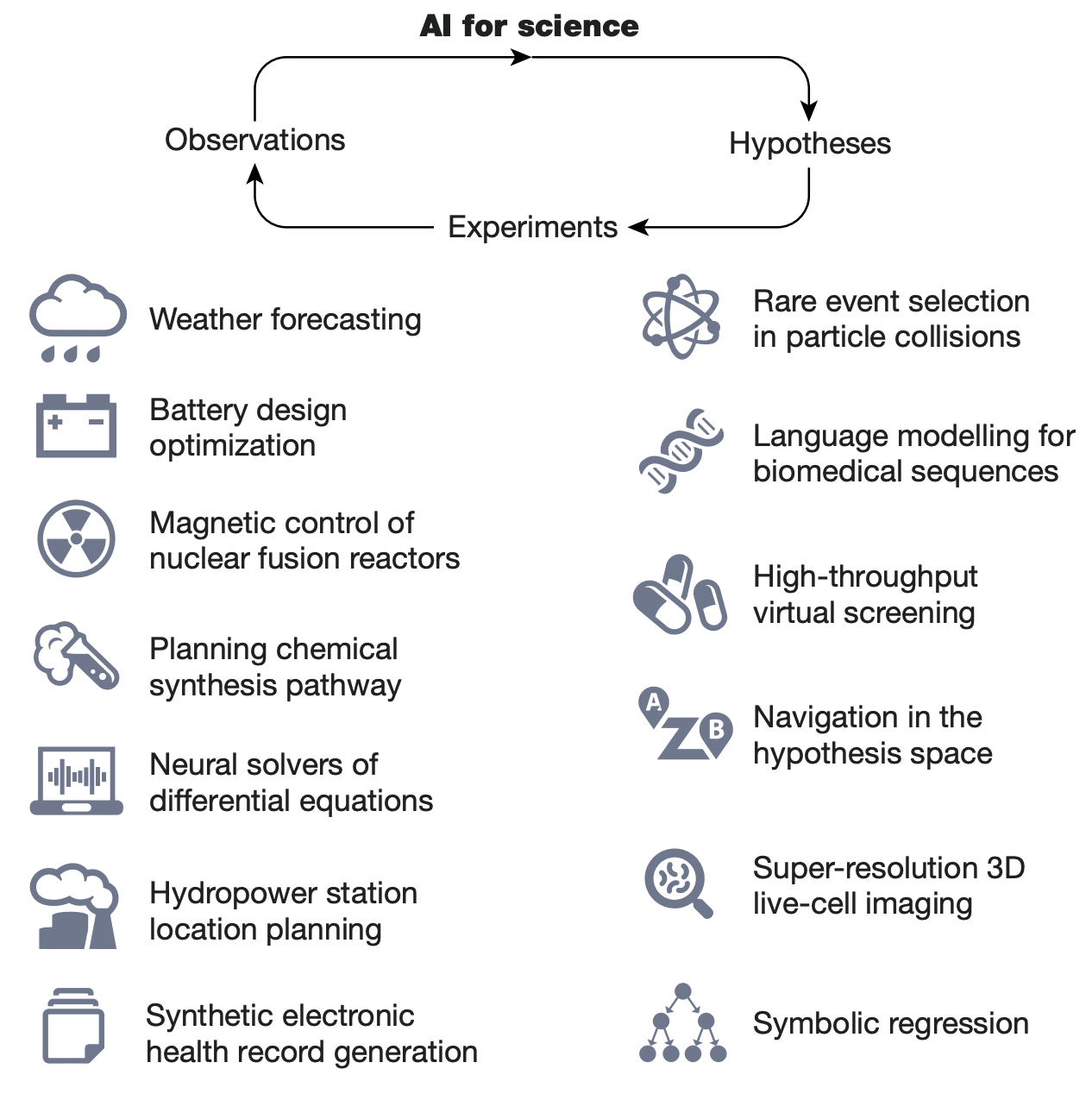

I am currently a postdoc at Stanford & Enigma, working with Prof. Andreas Tolias. I obtained my PhD from the Massachusetts Institute of Technology, advised by Prof. Max Tegmark. Before that, I obtained my B.S. from Peking University. My research interests lie at the intersection of AI and Science:

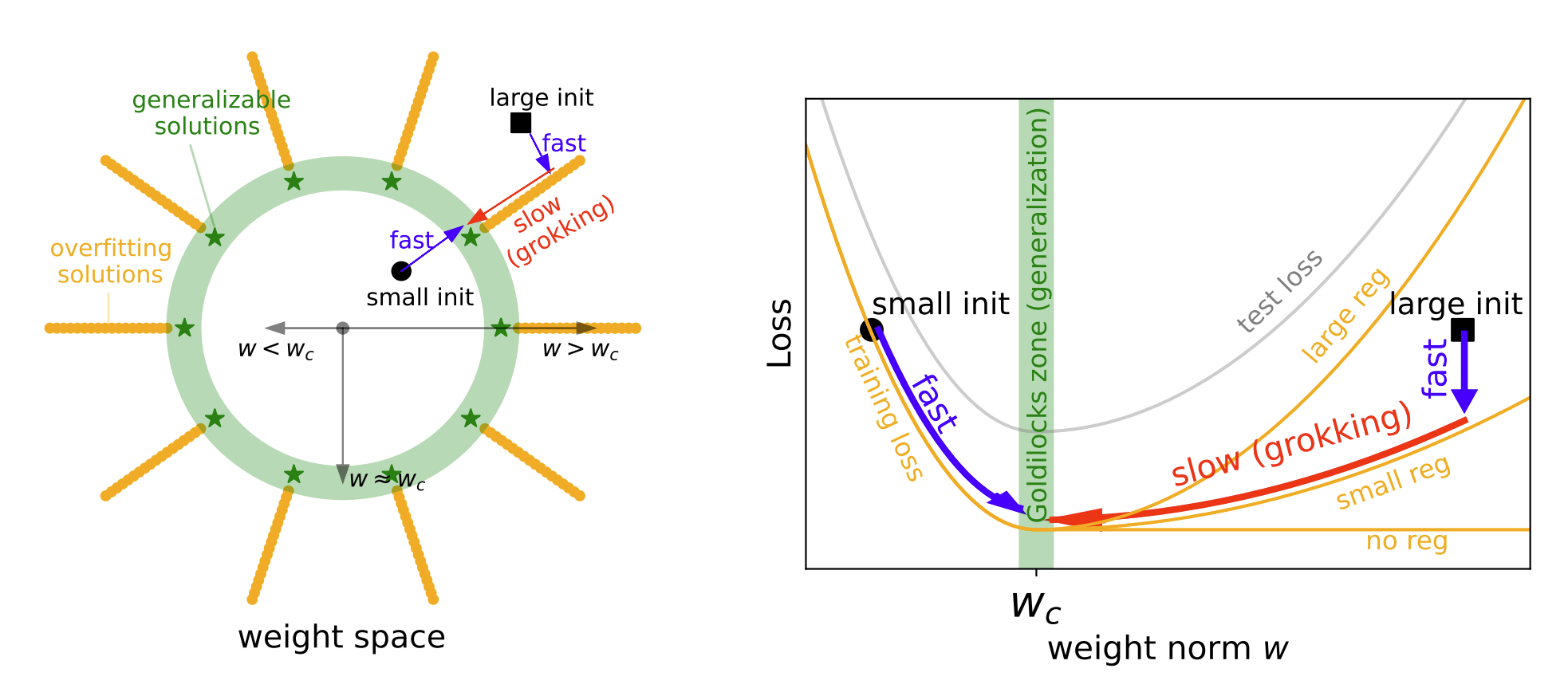

- Science of AI: Understanding AI using science. I’m interested in understanding intriguing network phenomena (e.g., grokking, neural scaling laws).

- Science for AI: Advancing AI using science. When the scaling of the current paradigm plateaus, it is time to refocus on fundamental science (for AI).

- AI for Science: Advancing science using AI. I’m excited about inventing accurate/interpretable AI4Science models and curiosity-driven AI scientists.

Consider reading these Quanta articles if you want a taste of my work:

- Science of AI (Grokking): How Do Machines ‘Grok’ Data?

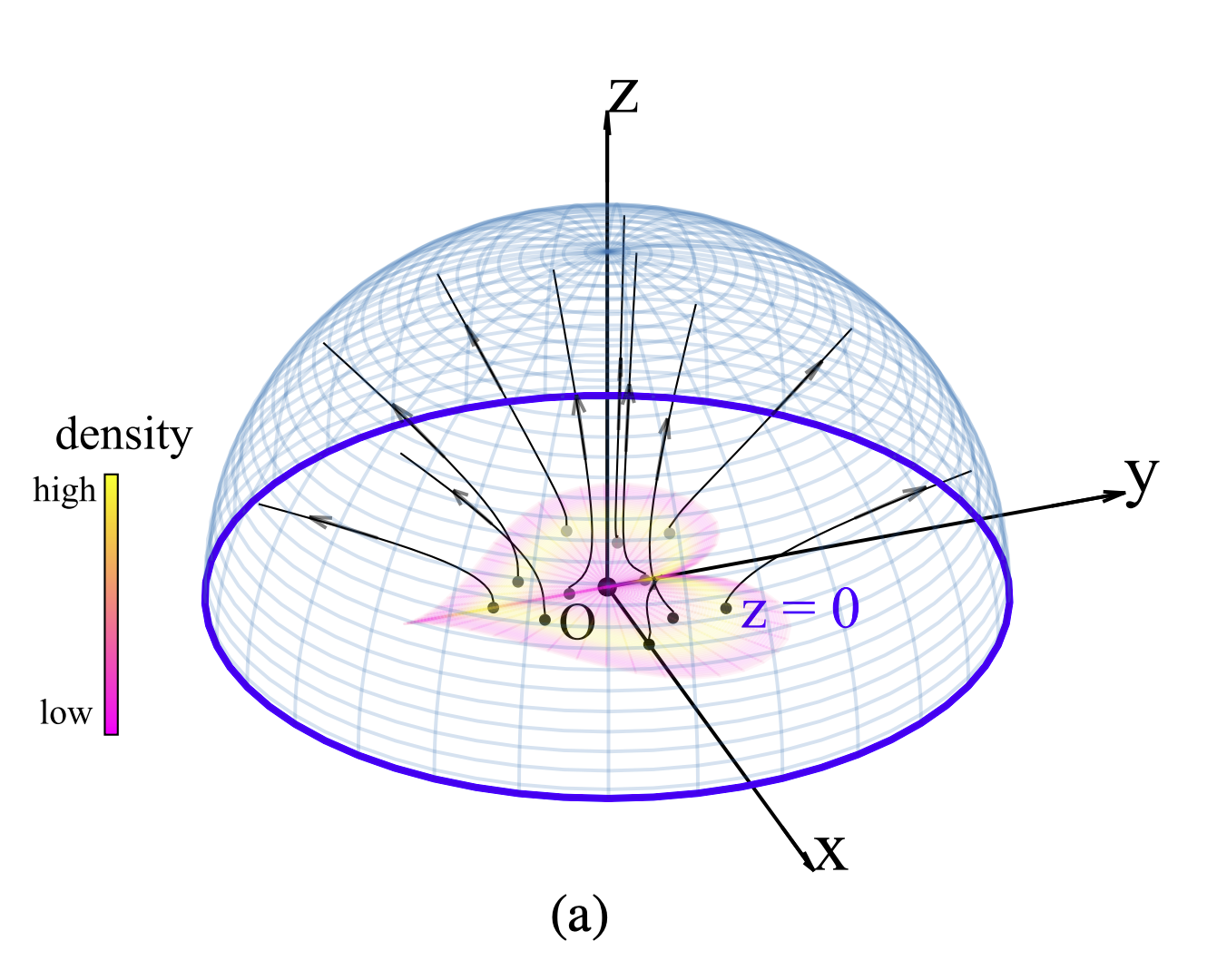

- Science for AI (Poisson Flow): The Physical Process That Powers a New Type of Generative AI

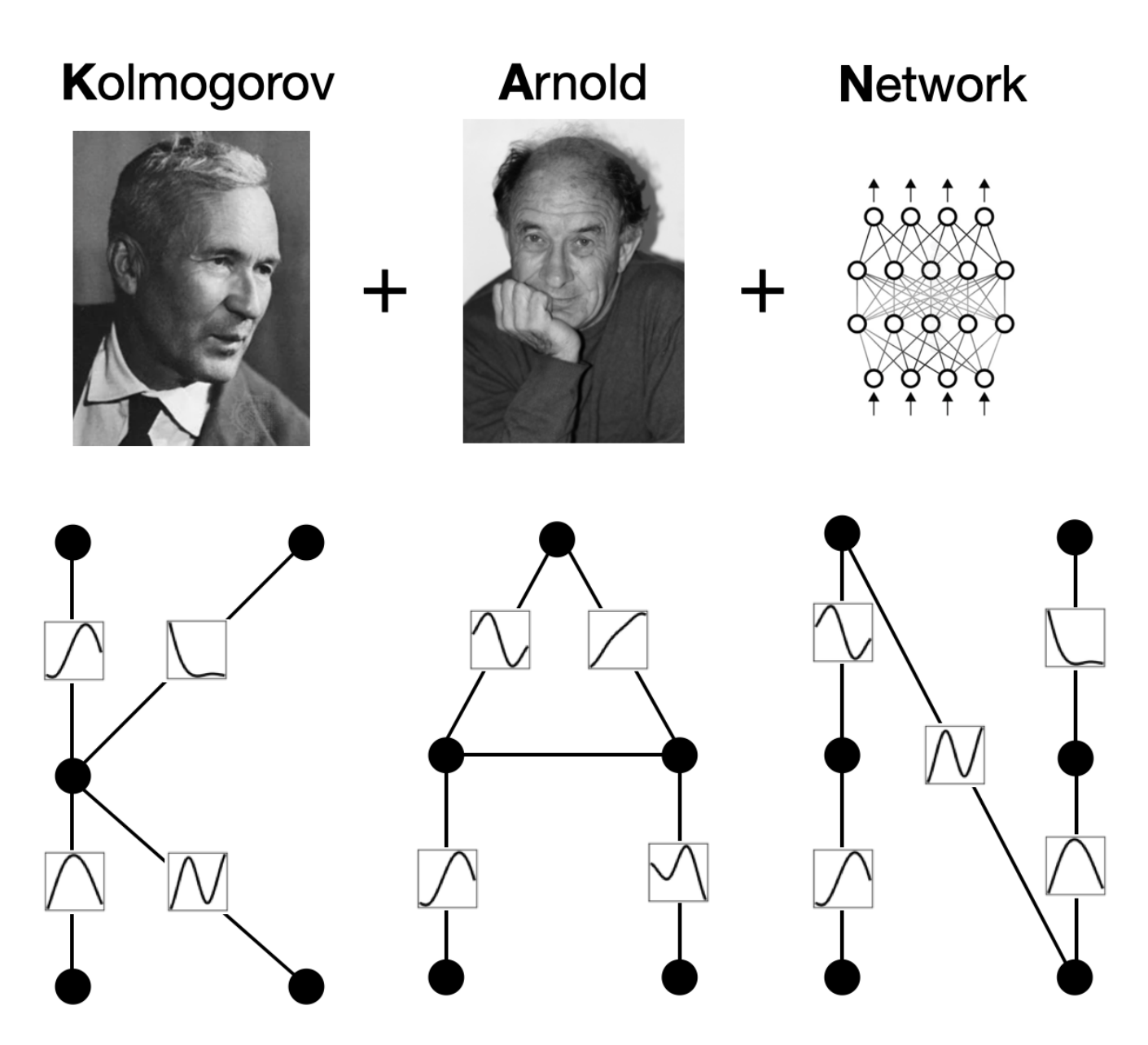

- AI for Science (KAN): Novel Architecture Makes Neural Networks More Understandable

My research philosophy is best described by John Hopfield’s inspiring words in his Nobel lecture:

[About physics (of AI)] Physics is not defined by subject matter, but is a point of view that the world around us (with effort, ingenuity and adequate resources) is understandable in a predictive and reasonably quantitative fashion.

[About choosing problems] I am now looking for a big problem whose resolution and understanding will be of significance far beyond its normal disciplinary boundaries and will reorganize the fields from which they came.

latest posts

| Date | Post |
|---|---|
| Feb 09, 2026 | Memory 1 -- How much do linear layers memorize? |
| Feb 08, 2026 | Transformers don't learn Newton's laws? They learn Kepler's laws! |
| Feb 07, 2026 | When I say "toy models", what do I mean? |

selected publications

- Seeing is believing: Brain-inspired modular training for mechanistic interpretability. Entropy, 2023

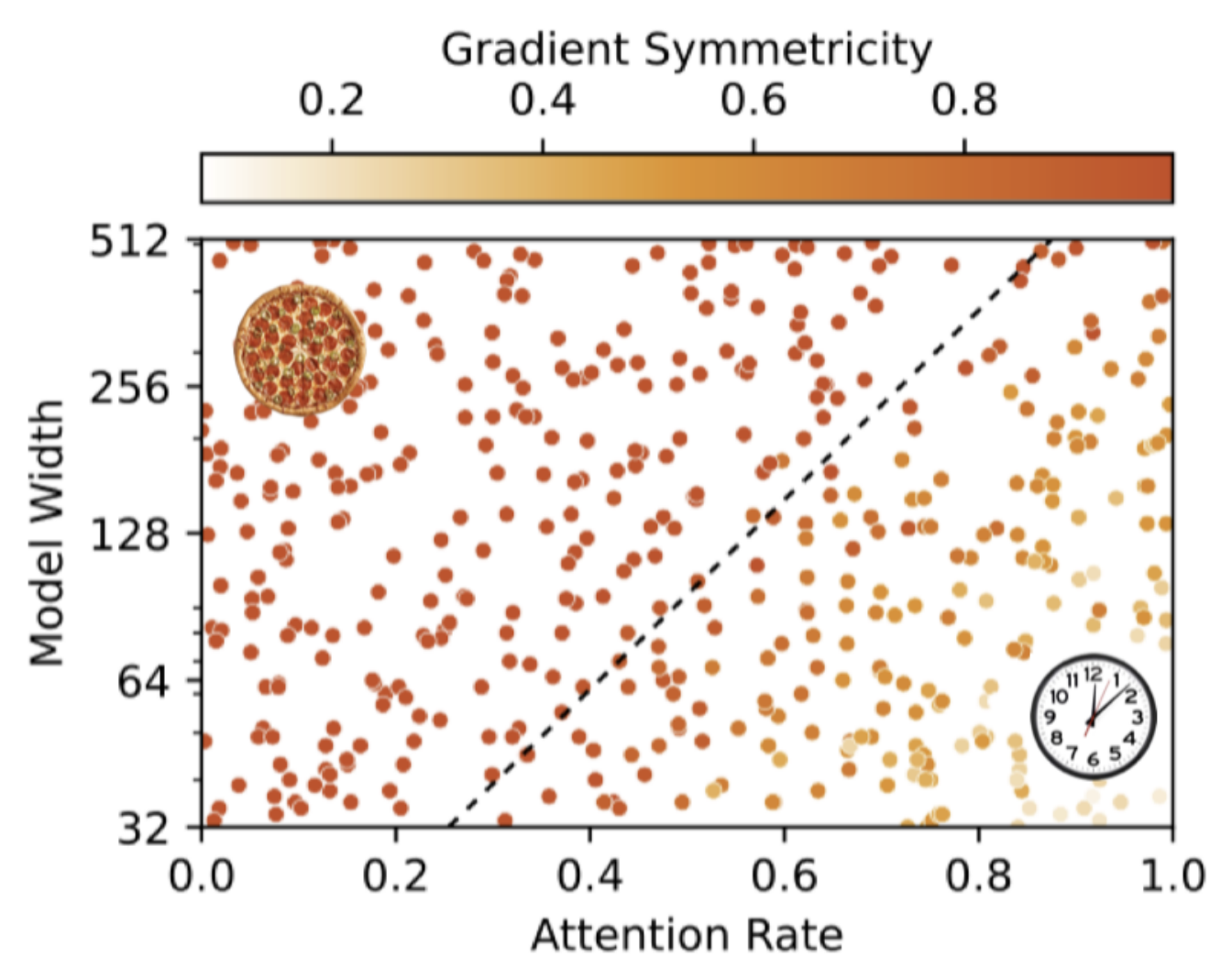

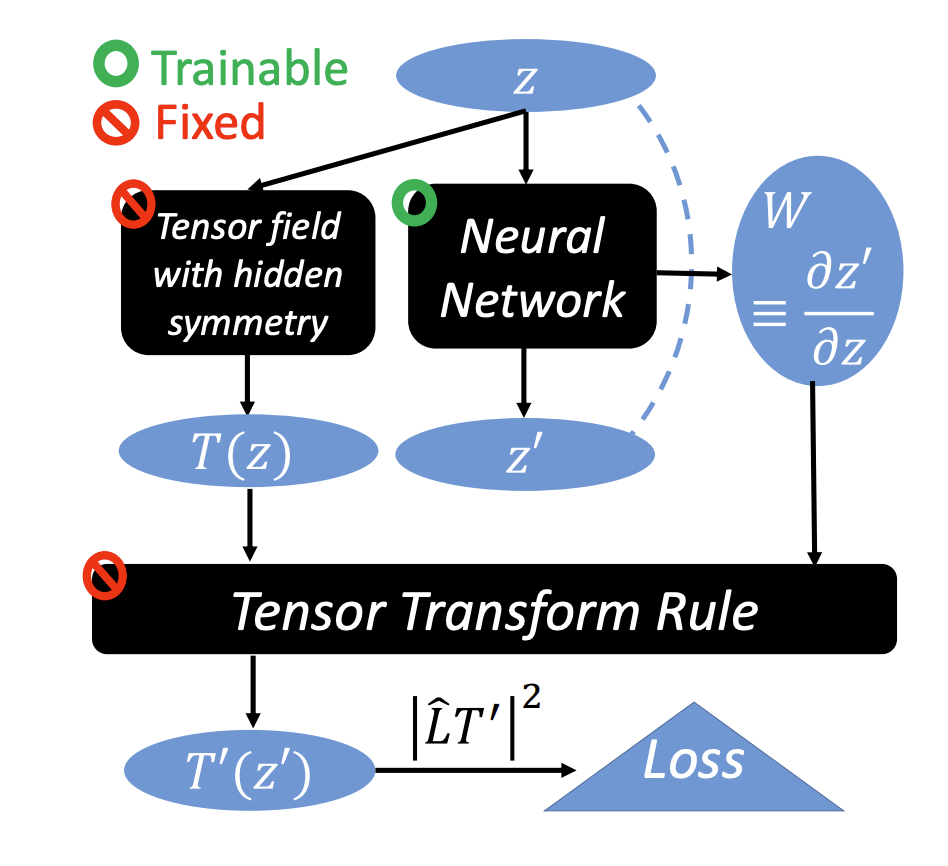

- The Clock and the Pizza: Two Stories in Mechanistic Explanation of Neural Networks. In Thirty-seventh Conference on Neural Information Processing Systems, 2023

- Omnigrok: Grokking Beyond Algorithmic Data. In The Eleventh International Conference on Learning Representations, 2023