Ziming Liu

Ziming Liu (刘子鸣)

lzmsldmjxm@gmail.com

I’m looking to hire two PhD students who will start in Fall 2027 at Tsinghua University.

Hi! I’m Ziming Liu (刘子鸣). I will join the College of AI at Tsinghua University as a tenure-track Assistant Professor starting in Fall 2026. I did my postdoc in AI + Neuroscience at Stanford with Andreas Tolias and my PhD in AI + Physics at MIT with Max Tegmark. Before that, I obtained my B.S. in physics from Peking University in 2020. Get my CV here.

I’m currently devoted to two related things:

- “Physics of AI” – using first principles to understand the structure (“Google map”) of AI research/idea/design space.

- “AI for AI” – building an AI agent that can intelligently navigate through the map of ideas, like human AI researchers.

I believe both the AI agent and the “physics of AI” knowledge base will become key ingredients of a safe and efficient AGI, which naturally supports curiosity-driven continual learning.

Over a longer time scale, my research interests generally lie in the intersection of AI and Science:

- Science of AI: Understanding AI using science. I’m interested in understanding intriguing network phenomena (e.g., grokking, neural scaling laws).

- Science for AI: Advancing AI using science. When the scaling of the current paradigm plateaus, it is time to refocus on fundamental science (for AI).

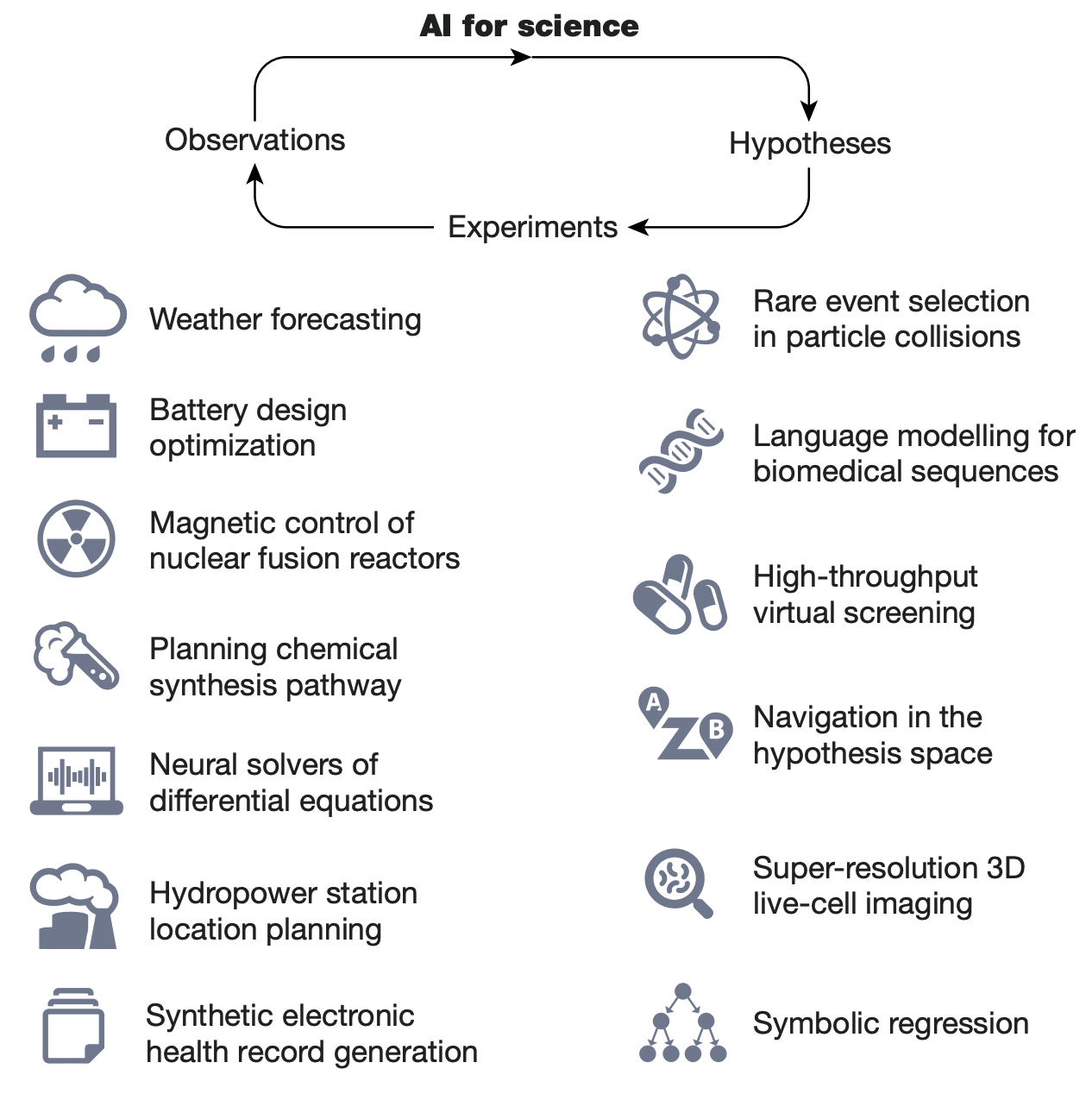

- AI for Science: Advancing science using AI. I’m excited about inventing accurate/interpretable AI4Science models and curiosity-driven AI scientists.

Consider reading these Quanta articles if you want to get a taste of my research:

- Science of AI (Grokking): How Do Machines ‘Grok’ Data?

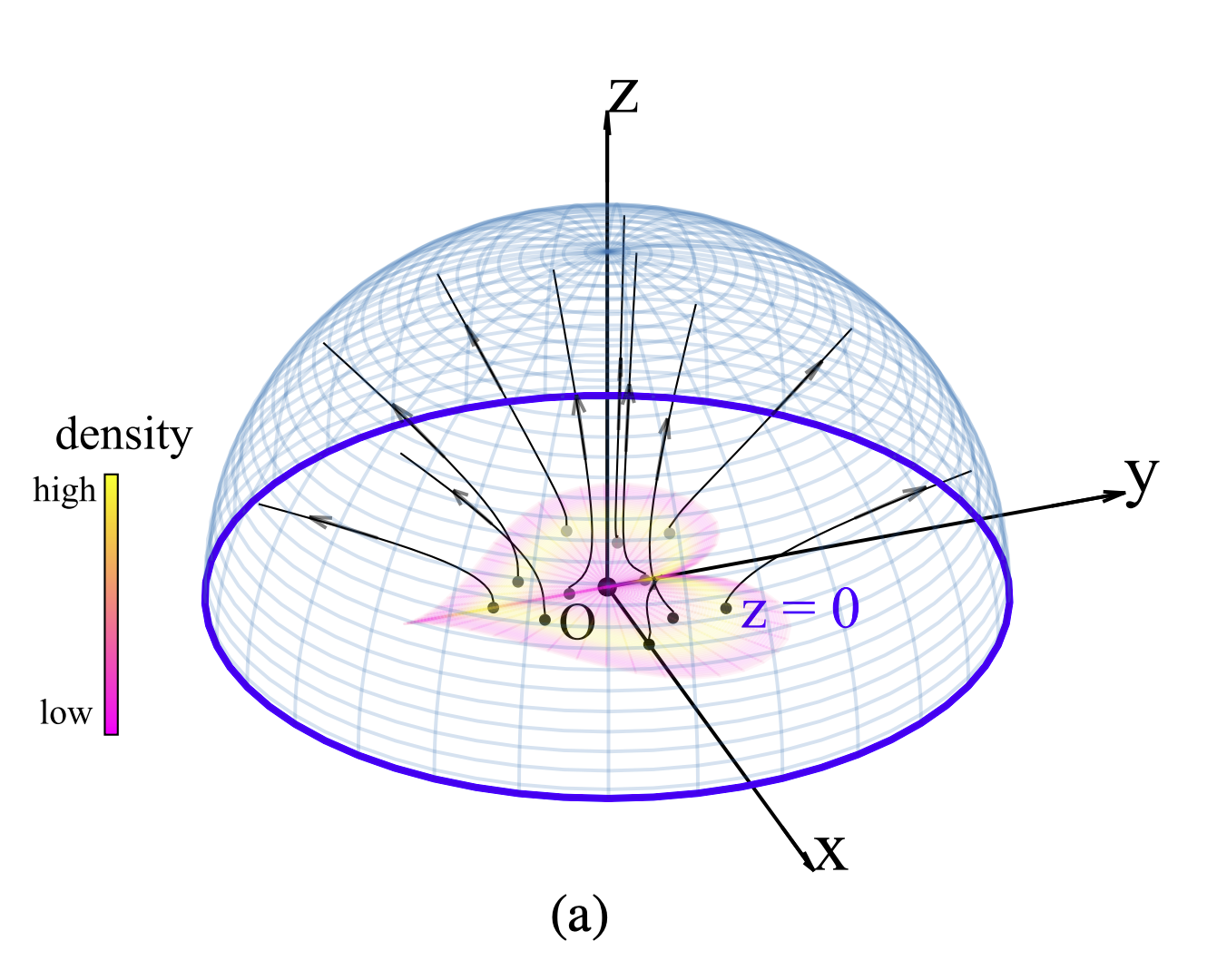

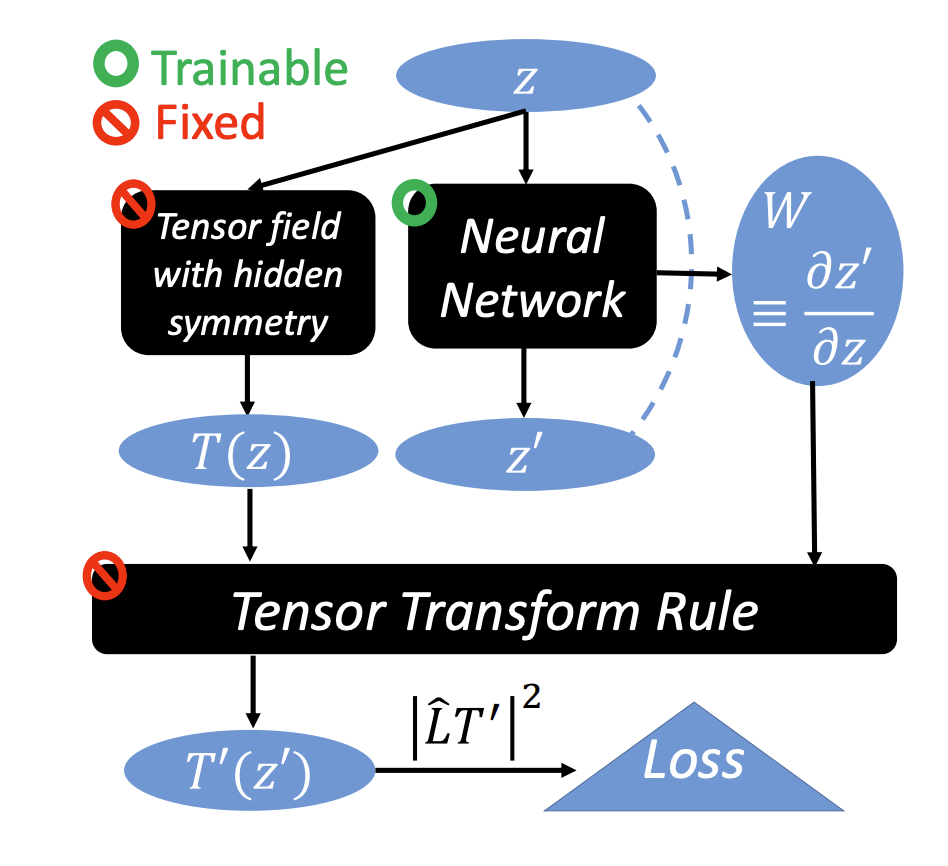

- Science for AI (Poisson Flow): The Physical Process That Powers a New Type of Generative AI

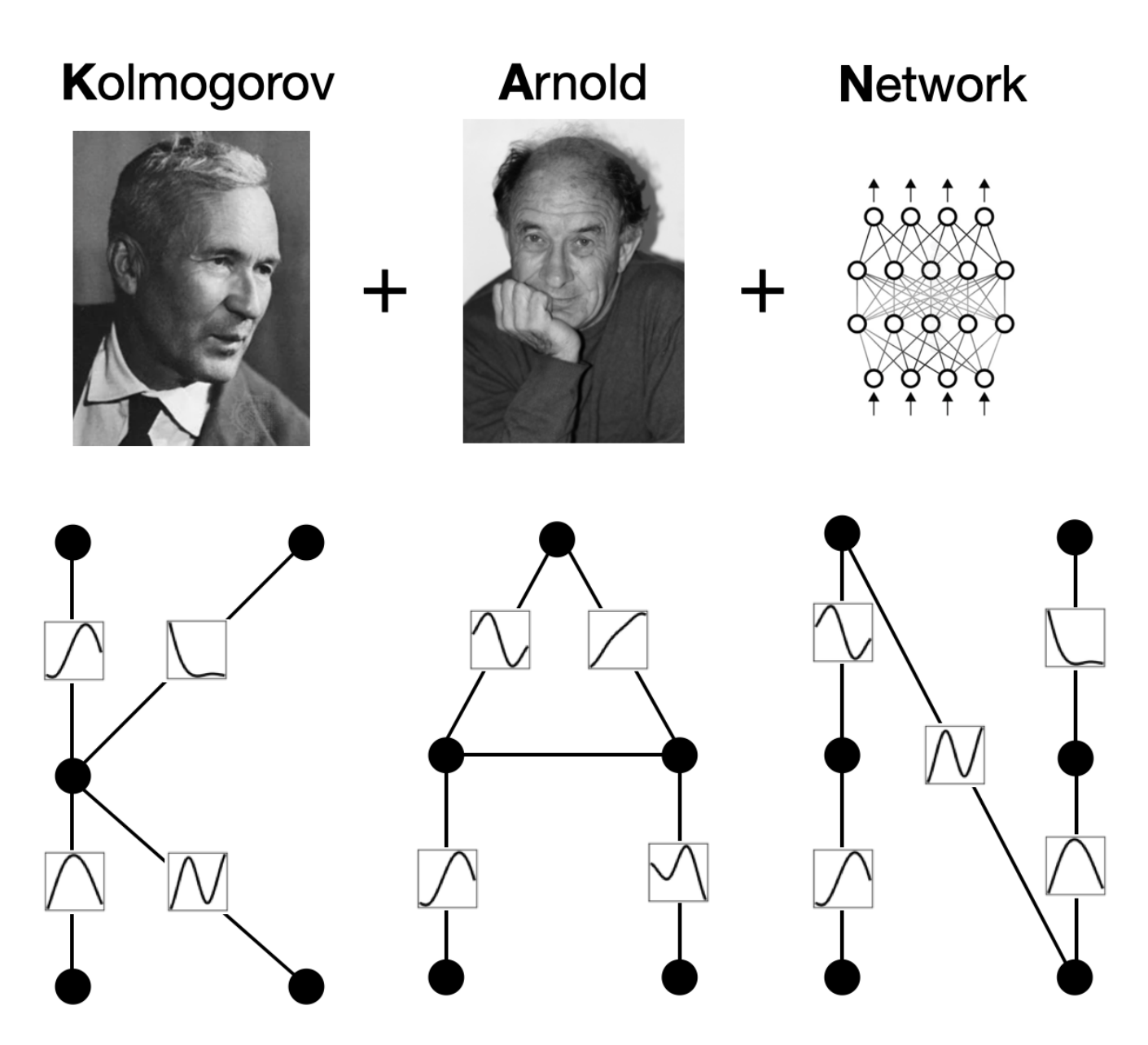

- AI for Science (KAN): Novel Architecture Makes Neural Networks More Understandable

selected publications

- Seeing is believing: Brain-inspired modular training for mechanistic interpretability. Entropy, 2023

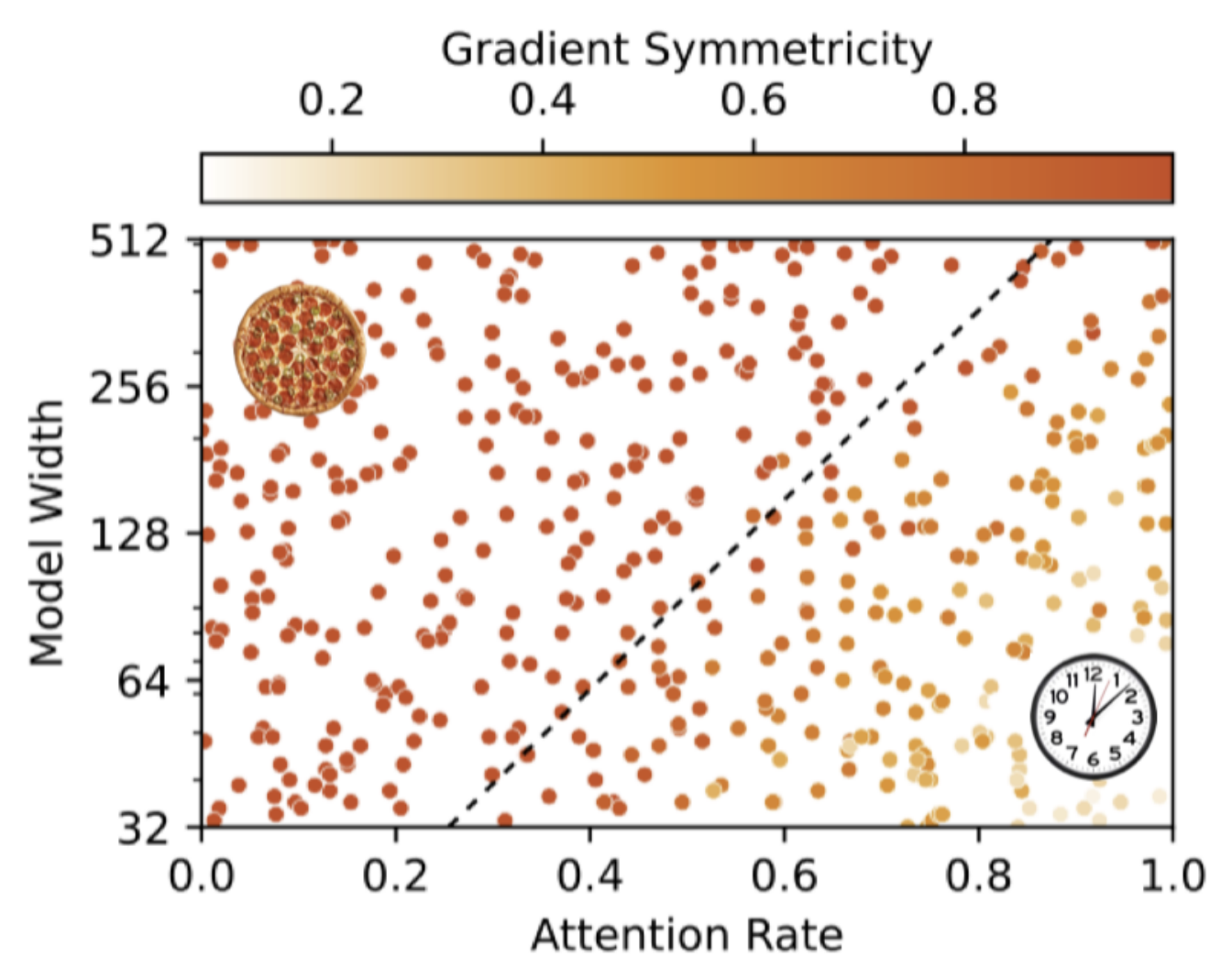

- The Clock and the Pizza: Two Stories in Mechanistic Explanation of Neural Networks. In Thirty-seventh Conference on Neural Information Processing Systems, 2023

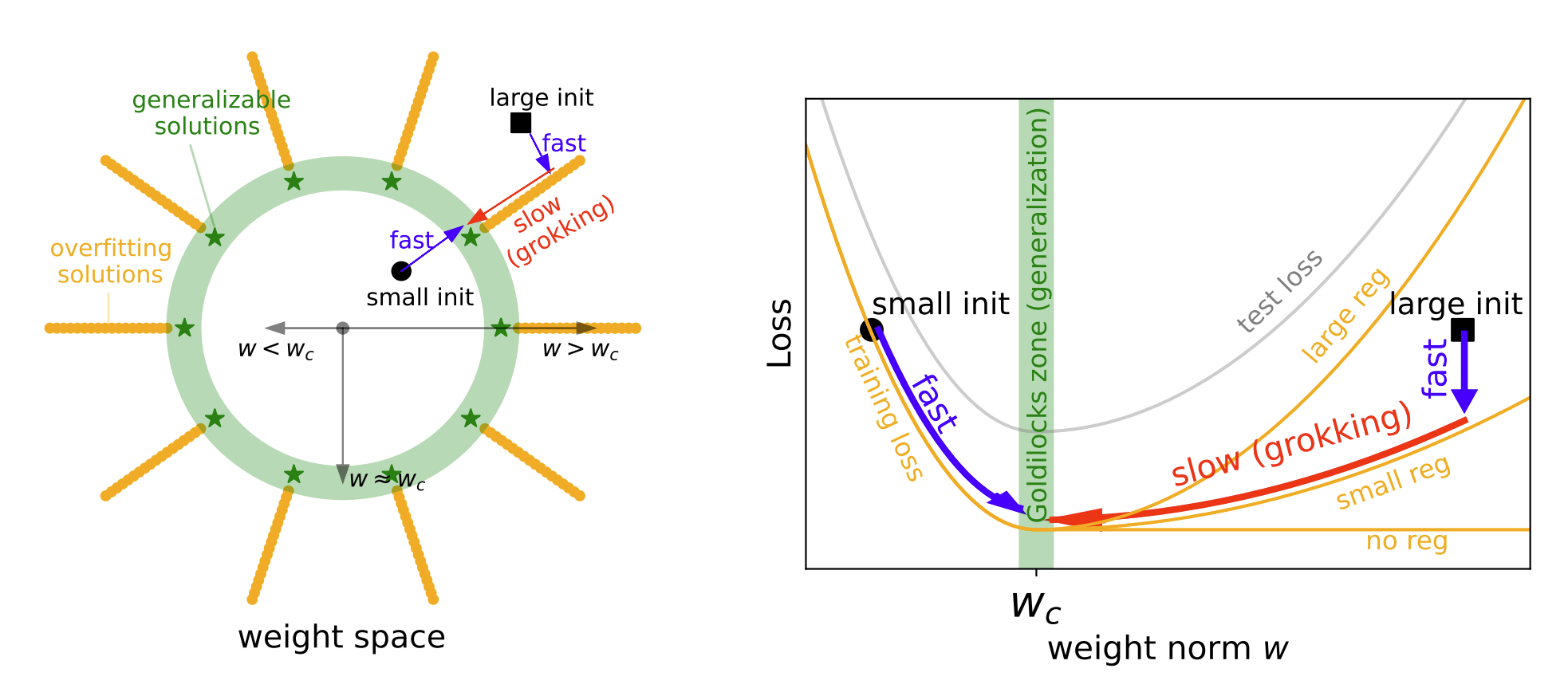

- Omnigrok: Grokking Beyond Algorithmic Data. In The Eleventh International Conference on Learning Representations, 2023
