Physics 2 -- Transformers fail to maintain physical consistency for circular motion

Author: Ziming Liu (刘子鸣)

Motivation

Most current world models and video generative models suffer from physical or semantic inconsistency. While a generated video may appear coherent for a few seconds, it often starts producing inconsistent content over longer horizons—even though the new content may still look locally plausible. The origin of this inconsistency is difficult to pin down, because both the data (natural scenes) and the models are largely black boxes.

In this article, we instead study a toy dataset—circular motion—which is highly controlled and well understood. By training transformers on this toy dataset, we uncover failure modes in the generated trajectories that closely resemble the inconsistency problems observed in large-scale world models.

Problem setup

We consider a circular motion dataset defined as follows. The radius is sampled as \(r \sim U[0.15, 0.35]\), and the angle evolves as \(\varphi_t = \omega t + \varphi_0,\) where \(\varphi_0 \sim U[0, 2\pi]\), the angular velocity is \(\omega = 0.5\), and \(t = 1, 2, \ldots, 50\). This corresponds to Cartesian coordinates \(x_t = r \cos \varphi_t, \quad y_t = r \sin \varphi_t.\)
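A minimal sketch of how one trajectory from this dataset could be sampled, using only the Python standard library. The function name `sample_trajectory` and its arguments are illustrative choices, not taken from the original notebook; the sampling ranges, \(\omega = 0.5\), and \(t = 1, \ldots, 50\) follow the definitions above.

```python
import math
import random

def sample_trajectory(num_steps=50, omega=0.5, rng=None):
    """Sample one circular trajectory (hypothetical helper, not the author's code)."""
    rng = rng or random.Random()
    r = rng.uniform(0.15, 0.35)             # radius r ~ U[0.15, 0.35]
    phi0 = rng.uniform(0.0, 2.0 * math.pi)  # initial phase phi_0 ~ U[0, 2*pi]
    points = []
    for t in range(1, num_steps + 1):       # t = 1, 2, ..., num_steps
        phi = omega * t + phi0              # phi_t = omega * t + phi_0
        points.append((r * math.cos(phi), r * math.sin(phi)))
    return r, phi0, points
```

Every point returned lies at distance \(r\) from the origin, which is the conserved quantity discussed later in the article.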

We follow the tokenization scheme of Vafa et al. Each coordinate pair \((x, y)\) is discretized into tokens, with \(x\) and \(y\) tokenized independently. Specifically, the \(x\) coordinate is mapped to \(\lfloor N x \rfloor\), and the \(y\) coordinate is mapped to \(\lfloor N y \rfloor + N\). In total, this yields \(2N\) distinct tokens.
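The discretization can be sketched as follows. Two things here are assumptions rather than details from the article: the value \(N = 100\), and a shift of \(0.5\) applied to each coordinate before binning. The shift is added because the raw coordinates lie in \([-0.35, 0.35]\), and the formula only produces token ids in \([0, 2N)\) when its inputs lie in \([0, 1)\). The inverse map `detokenize_point` (also hypothetical) returns bin centers.

```python
import math

def tokenize_point(x, y, n_bins=100, shift=0.5):
    """Map (x, y) to two token ids in a shared vocabulary of size 2 * n_bins.

    ASSUMPTION: n_bins and shift are illustrative; the article does not state them.
    """
    x_tok = math.floor(n_bins * (x + shift))           # x tokens occupy [0, n_bins)
    y_tok = math.floor(n_bins * (y + shift)) + n_bins  # y tokens occupy [n_bins, 2 * n_bins)
    return x_tok, y_tok

def detokenize_point(x_tok, y_tok, n_bins=100, shift=0.5):
    """Approximate inverse: return the center of each token's bin."""
    x = (x_tok + 0.5) / n_bins - shift
    y = (y_tok - n_bins + 0.5) / n_bins - shift
    return x, y
```

With this binning, the round trip through tokens changes each coordinate by at most half a bin width, \(1/(2N)\), so the discretization itself limits how precisely the radius can be represented.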

The transformer takes in and outputs two tokens per time step (corresponding to \(x\) and \(y\)), rather than a single token. The training objective is the standard next-token(s) prediction cross-entropy loss.
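As a rough illustration of the objective, the per-step loss can be written as the sum of two cross-entropy terms, one for the \(x\) token and one for the \(y\) token. This is a sketch under assumptions: the article does not specify how the two outputs are produced, so the code simply takes two logit vectors over the shared vocabulary, and the names `cross_entropy` and `step_loss` are hypothetical.

```python
import math

def cross_entropy(logits, target):
    """Negative log-probability of `target` under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[target]

def step_loss(x_logits, y_logits, x_target, y_target):
    """Loss for one time step: x-token term plus y-token term (assumed form)."""
    return cross_entropy(x_logits, x_target) + cross_entropy(y_logits, y_target)
```

For uniform logits over a vocabulary of size \(V\), each term equals \(\log V\), which gives a baseline against which a trained model's loss can be compared.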

Results

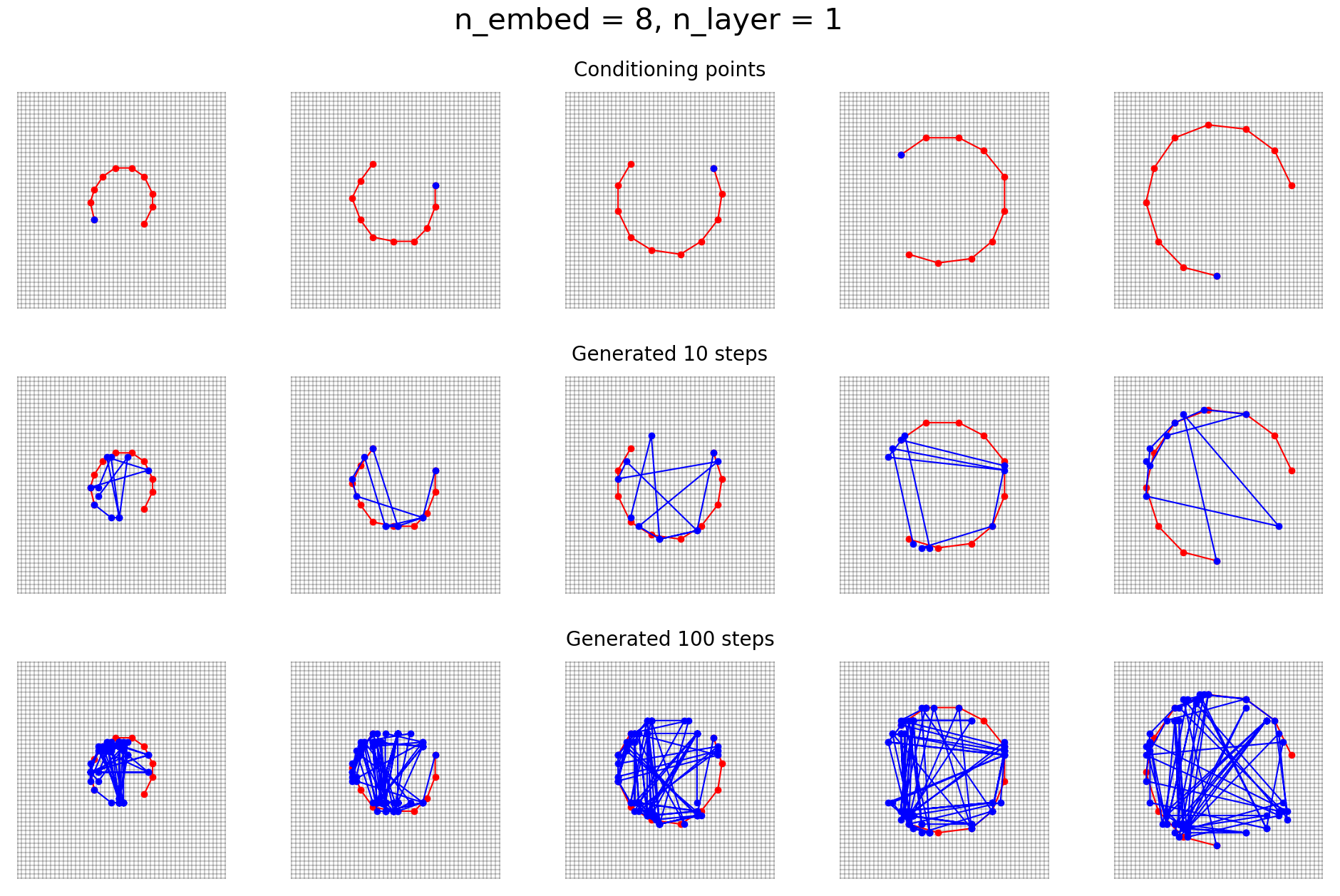

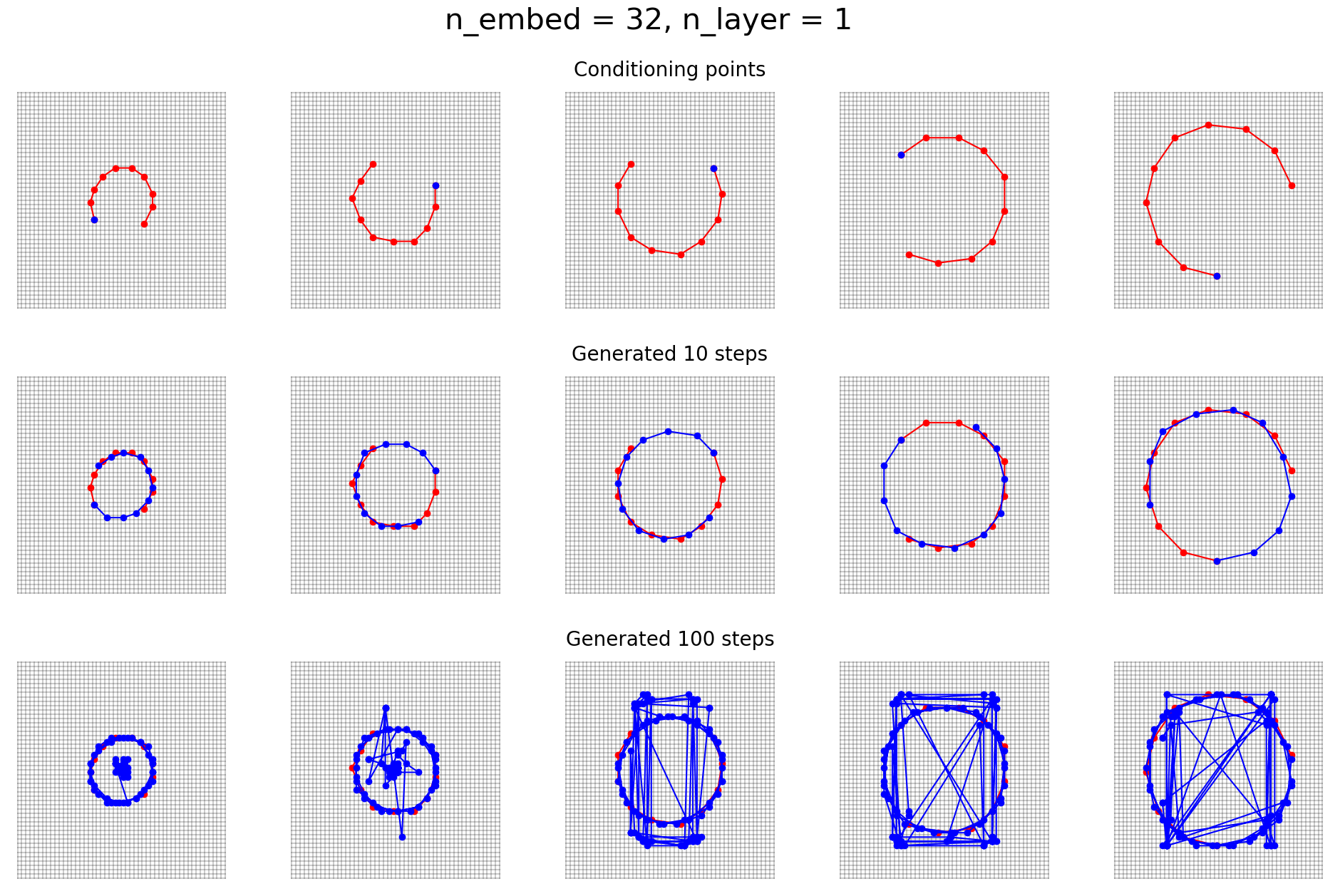

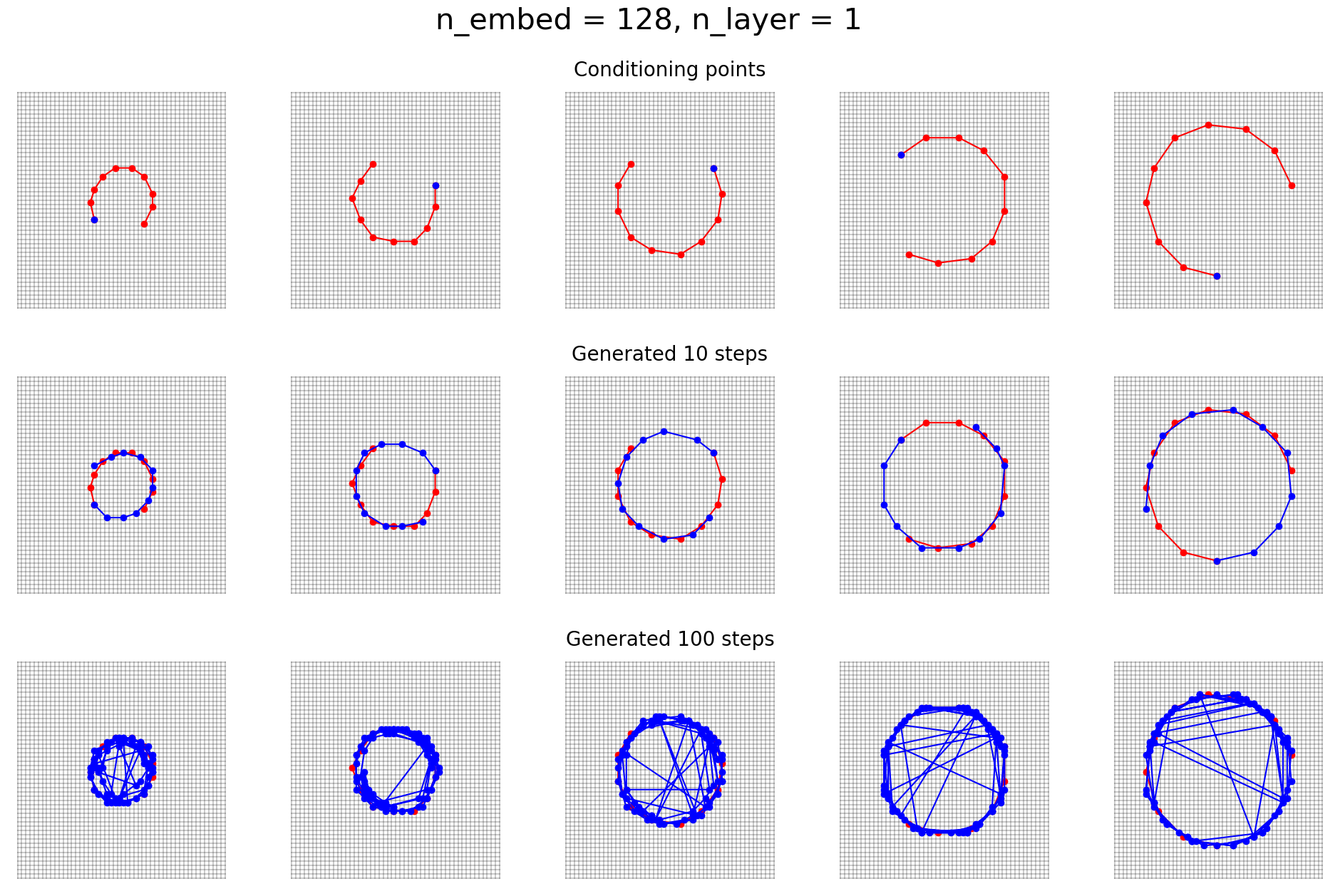

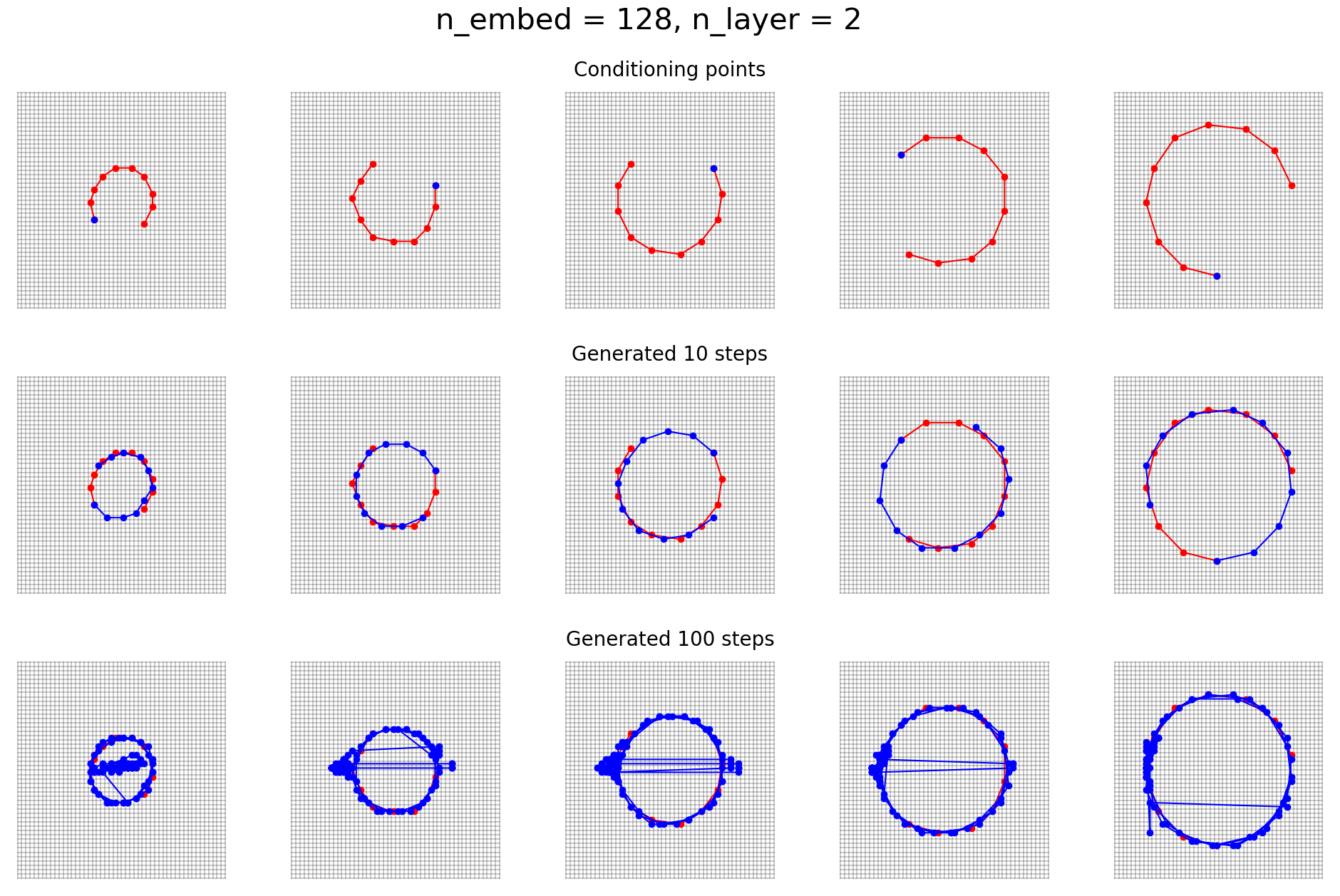

After training, we condition the model on the first 10 points (shown in red), and then generate the next 90 points autoregressively (shown in blue).

We show results for models of different sizes below.
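The evaluation protocol (condition on a prefix, then generate autoregressively) can be sketched as a simple loop. The trained transformer is not reproduced here; `predict_next` is a hypothetical callable standing in for it, and `oracle_rotation` is an idealized stand-in that rotates the last point by \(\omega = 0.5\), which is what a perfectly consistent model would do.

```python
import math

def rollout(prefix, predict_next, num_new):
    """Extend `prefix` by `num_new` points, feeding each prediction back as context."""
    traj = list(prefix)
    for _ in range(num_new):
        traj.append(predict_next(traj))  # the model sees everything generated so far
    return traj

def oracle_rotation(context, omega=0.5):
    """Idealized predictor (not the trained model): rotate the last point by omega."""
    x, y = context[-1]
    c, s = math.cos(omega), math.sin(omega)
    return (c * x - s * y, s * x + c * y)
```

Calling `rollout(prefix, oracle_rotation, 90)` with a 10-point prefix yields a 100-point trajectory that stays on the circle and advances by a fixed angle at every step, which serves as the reference behavior for the generated trajectories.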

Comment

When the model is sufficiently expressive, we observe the following behavior:

- During the first few generation steps, the object follows the correct circular trajectory.

- Over longer generation horizons, the object remains approximately on the circle, but its position begins to jump erratically around the circle instead of advancing steadily.

We hypothesize that:

- The transformer is able to learn a conservation law—in this case, conservation of radius—and infer its value from the context.

- While the model initially follows the correct motion law (circular motion), it fails to maintain these dynamics over long contexts, leading to trajectory inconsistency.
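One way to separate the two hypotheses above is to measure the radius and the per-step angular increment along a generated trajectory. The diagnostic below is a suggestion rather than something taken from the article, and `radius_and_angle_steps` is a hypothetical helper. If the hypotheses hold, the radii should stay close to the value implied by the context, while the angular steps should drift away from \(\omega = 0.5\) at long horizons.

```python
import math

def radius_and_angle_steps(points):
    """Return per-point radii and per-step angular increments wrapped to [-pi, pi)."""
    radii = [math.hypot(x, y) for x, y in points]
    angles = [math.atan2(y, x) for x, y in points]
    steps = []
    for a0, a1 in zip(angles, angles[1:]):
        # wrap the difference so crossing the atan2 branch cut does not look like a jump
        d = (a1 - a0 + math.pi) % (2.0 * math.pi) - math.pi
        steps.append(d)
    return radii, steps
```

For an exact circular trajectory, every entry of `steps` equals \(\omega\) and every radius equals \(r\); plotting both quantities against the generation step would show where consistency breaks down.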

Code

Google Colab notebook available here.

Citation

If you find this article useful, please cite it as:

BibTeX:

@article{liu2026physics-2,
  title={Physics 2 -- Transformers fail to maintain physical consistency for circular motion},
  author={Liu, Ziming},
  year={2026},
  month={February},
  url={https://KindXiaoming.github.io/blog/2026/physics-2/}
}
